Human Led. Bot Amplified. The Tylenol–Autism Conversation

New Today: A closer look at how bots amplified the Tylenol–autism conversation and why this matters for every brand navigating today’s AI-shaped internet.

In late September, online conversation around Tylenol surged after the U.S. government publicly highlighted a potential link between prenatal use of acetaminophen and autism. The announcement immediately drew intense attention because it touched a set of highly sensitive themes: pregnancy, maternal guilt, pharmaceutical trust, political credibility, and public health guidance.

The discussion gained momentum as political figures and parent advocacy groups began weighing in, prompting widespread media coverage and responses from health organizations. Institutions such as the World Health Organization, the American College of Obstetricians and Gynecologists, and the Autism Science Foundation reaffirmed that existing research does not prove a causal relationship. Despite these scientific clarifications, the discussion quickly grew into a broader, emotionally driven conversation. Online communities fragmented into legal, scientific, and deeply personal narratives, setting the stage for a rapid surge in attention across X.

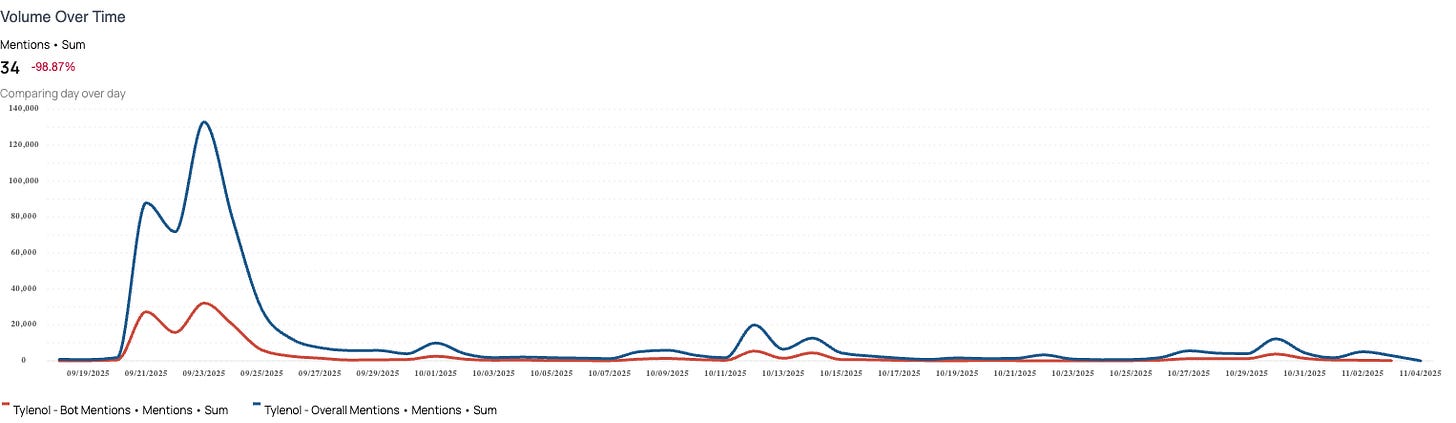

PeakMetrics analyzed a sample of 437,131 posts on X published between September 19 and November 4 to understand how the conversation spread, where it gained momentum, and the role automated accounts played in prolonging its visibility.

How it started

In the days leading up to the joint announcement by Donald Trump and Robert F. Kennedy Jr. on September 22, speculation circulated about what the two might reveal. When they claimed that Tylenol use during pregnancy could be linked to autism diagnoses, the topic exploded across platforms, peaking on September 23.

How bots changed the picture

Nearly 30% of the posts on X analyzed by PeakMetrics were classified as likely or almost certainly driven by automated accounts. These bots did not create the narrative, but they played a noteworthy role in amplifying it. Bot activity consistently followed human spikes, trailing just behind each surge in attention.

As our director of insights, Molly Dwyer, told Marketing Brew, "More of the content that we're seeing is not necessarily created by bots, but is being amplified by bots," adding, "That's messing with our sense of reality and what matters."

Of all bot activity, 93% consisted of reposts of existing content rather than original posts, a telltale sign of amplification rather than narrative creation. The clusters that emerged appeared to be tied to engagement farming, "medical freedom" networks, and litigation marketing rather than state-backed influence. In practice, bots prolonged each wave of attention, making fringe claims appear more mainstream and sustaining their reach long after organic engagement would have faded.

What the conversation looked like

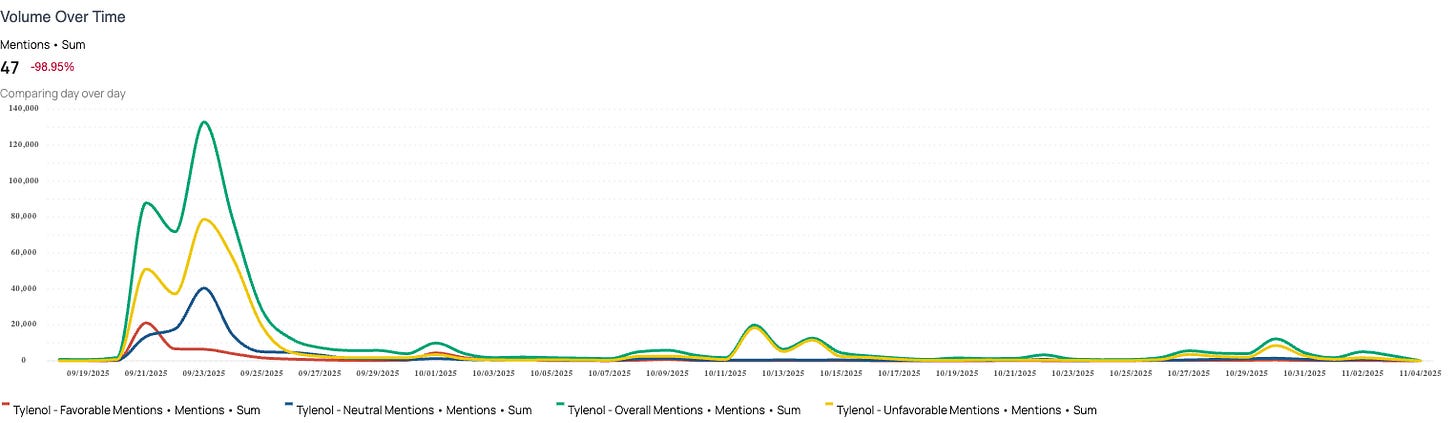

Sentiment across the conversation was predominantly negative. Overall, 65.8% of posts expressed unfavorable views toward Tylenol, 23.8% were neutral, and 10.4% were favorable. Bots were slightly more negative than human users, with 68.2% of their posts unfavorable compared with 64.5% among human posts. Corrective or favorable narratives gained occasional traction but faded quickly once amplification slowed.

The narratives that defined the debate

The Tylenol conversation was shaped by three broad groups of narratives, which differed sharply in how far and how long they traveled.

Unfavorable narratives dominated.

A large portion of the conversation centered on claims that Tylenol causes autism, along with political commentary, distrust in public institutions, litigation recruitment, and highly emotional posts from parents expressing anger, guilt, or betrayal. These narratives traveled the furthest because they tapped into fear, frustration, and long-standing skepticism toward pharmaceutical companies and government health agencies.

Neutral narratives provided context but rarely drove engagement.

News reports, legal updates, scientific "more research needed" discussions, and public-health explainers made up a smaller portion of the conversation. These posts shaped the informational backdrop but did little to change the trajectory of the discussion.

Favorable narratives offered reassurance but were short-lived.

Myth-busting from medical professionals, peer support among mothers, and occasional posts defending Tylenol’s safety record created brief moments of balance. However, these messages rarely held attention for long without sustained amplification.

What it means

X (formerly Twitter) already skews right. While the conversation on the platform grew more unfavorable toward Tylenol, it didn't drastically shift mass public opinion; instead, it validated what people in those spaces already believed. People who were skeptical of mainstream medicine, pharmaceutical companies, and government health guidance found their suspicions confirmed. The bots amplified outrage in circles already primed to believe it.

The concern isn't that this created new skeptics. It's that it reinforced existing ones and gave them something concrete to point to. And here's the bigger problem: once claims latch onto something as emotionally charged as pregnancy and child health, they spread too fast for any fact-check to keep up. Corporate credibility can't compete with fear when parents are involved.

There were bright spots. Brief upticks around myth-busting content and "women supporting women" reassurance showed that empathy and trusted third-party voices can push back. However, those moments were fleeting, and they worked only when deployed quickly.

Overall, the bot activity didn't flip mass opinion overnight, but it gave existing skepticism something to hold onto.

The bigger picture

The Tylenol conversation reflects a broader truth about how the internet works today. Information doesn’t spread because it’s accurate. It spreads because it’s emotional, shareable, and repeated quickly. High-emotion narratives move faster than fact-based ones, and bots or coordinated amplification give those narratives even longer life.

More teams are starting to notice this shift. It's no longer just about tracking what people are saying; it's about understanding who is actually driving the conversation. Separating real human reaction from automated amplification helps organizations see what is genuinely taking hold versus what is being artificially inflated beneath the surface.

Bots themselves aren't new. What is new is how easy they are to create, deploy, and monetize on an AI-driven internet. Automated networks can now scale faster, mimic human behavior more convincingly, and amplify content for financial or ideological gain with almost no friction. That's why brands are seeing them show up more often, from Cracker Barrel's logo backlash to the conversation around American Eagle's campaign and several recent politically charged moments. Bots are becoming a structural part of how narratives spread online.

PeakMetrics helps organizations see how conversations propagate, distinguish what is real from what is engineered, and act before amplification turns perception into reality.

Want to learn more? Book a narrative strategy session here.